Current Research

Systems and Architectures

Development of sensing and manipulation systems is enabled by a hardware and software infrastructure. The main elements of this infrastructure are:

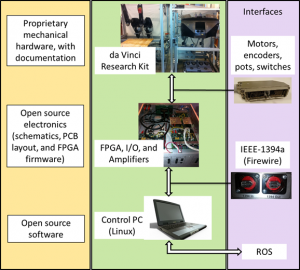

Open Source Controller for da Vinci Research Kit

We have combined the cisst and SAW software with the custom FireWire-based motor controller boards to create an open source controller for first-generation da Vinci systems. This controller has been replicated at more than 25 institutions worldwide (see Google Map). The project has recently expanded, with support from NSF NRI 1637789, to include development of a software infrastructure to support research in semi-autonomous teleoperation using systems such as the dVRK, Raven II, and other robots.

JHU documentation for dVRK system (on GitHub)

Collaborative Robotics organization (on GitHub)

Intuitive Surgical webpage for da Vinci Research Kit community

Machine Learning for Robotic Surgery

With support from NSF AccelNet 1927354, we are coordinating an international effort to advance research in data-driven methods for perception of the surgical environment and state, leading to systems that can provide intelligent assistance to the surgeon or can autonomously execute tasks. The effort will involve the communities that have already formed around shared, open research platforms for medical robotics research, exemplified by (but not restricted to) the da Vinci Research Kit (dVRK) and the Raven II surgical robot.

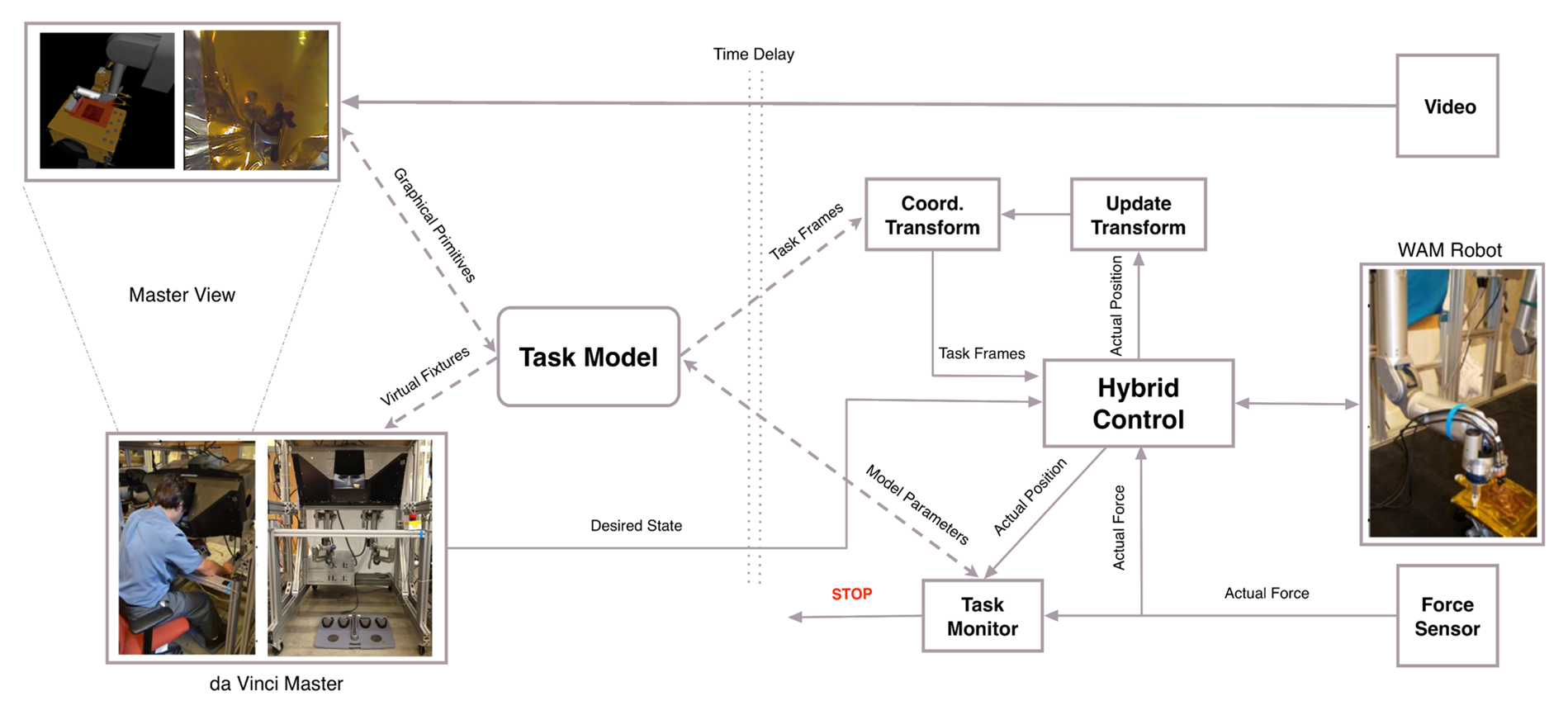

Robotic Telemanipulation with Time Delay (“Satellite Surgery”)

The goal of this project is to develop a telemanipulation environment for on-orbit manipulation and servicing of space hardware. Purely autonomous robotic manipulation systems typically fail unless the environment is carefully designed for robotic servicing. In the case of most existing spacecraft, we need to design robotic manipulation systems that can work with environments not originally designed for robotic servicing.

Our main project is to develop and demonstrate a telerobotic system that can assist with removal of the insulating blanket flap that covers the spacecraft’s fuel access port. This flap is taped over the primary insulating blanket that covers the rest of the spacecraft. It is necessary to remove the flap without damaging the primary blanket or satellite.

Active: 2011 – Present

Augmented Reality Head-Mounted Display (HMD) Research

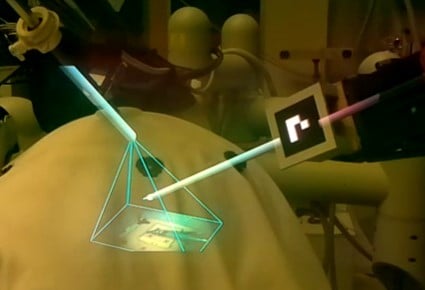

We have several completed and ongoing research projects that involve augmented reality (AR) on a head-mounted display (HMD). Our initial work integrated an optical tracking system with an HMD to create a “head-mounted navigation system” for neurosurgery. As HMD technology improved, we explored the use of AR for training combat medics. During this project, we also developed an improved calibration method for optical see-through HMDs. Our current projects involve AR for ventriculostomy (left figure), for the bedside assistant in robotic surgery (right figure), and as an interface to control a prosthetic hand. The lab has several HMDs, including Microsoft HoloLens (Versions 1 and 2) and Epson Moverio BT-200/BT-300.

Active: 2010 – Present

Completed or Currently Inactive Research

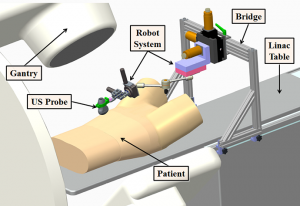

Robotic Assisted Ultrasound-Guided Radiation Therapy

The goal of this project is to construct a robotically controlled, integrated 3D x-ray and ultrasound imaging system to guide radiation treatment of soft-tissue targets. We are especially interested in registration between the ultrasound images and the CT images (from both the simulator and accelerator), because this enables the treatment plan and overall anatomy to be fused with the ultrasound image. However, ultrasound image acquisition requires relatively large contact forces between the probe and patient, which leads to tissue deformation. One approach is to apply a deformable (non-rigid) registration between the ultrasound and CT, but this is technically challenging. Our approach is to apply the same tissue deformation during CT image acquisition, thereby removing the need for a non-rigid registration method. We use a model (fake) ultrasound probe to avoid the CT image artifacts that would result from using a real probe. Thus, the requirement for our robotic system is to enable an expert ultrasonographer to place the probe during simulation, record the relevant information (e.g., position and force), and then allow a less experienced person to use the robot system to reproduce this placement (and tissue deformation) during the subsequent fractionated radiotherapy sessions.

Not Active: 2011 – 2017

Constrained Optimization for Control of a Redundant Robot

We are investigating the use of constrained optimization to determine the optimal joint configurations of a redundant robot (i.e., one with more than six degrees-of-freedom) as it performs a particular task. The solution is optimal with respect to the objectives and constraints formulated for the task. As a motivating example, consider robot-assisted total joint replacement, where the robot must mill a precise shape in a bone. While the obvious objective is to minimize the deviation from the desired path, additional objectives and/or constraints can be introduced to avoid joint limits and singularities, stay within joint velocity and acceleration constraints, avoid collisions with obstacles, and other goals.
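The idea can be illustrated with a small, self-contained sketch. It is not the formulation used in this project: it assumes a hypothetical planar three-joint arm tracking a two-dimensional tool path (so the arm has one redundant degree of freedom), a single objective (deviation from the desired tool motion, plus a small damping term), and a single type of constraint (a symmetric joint velocity limit). The function names, the damping value, and the projected-gradient solver are illustrative choices; a practical system would more likely use a dedicated quadratic programming solver.

```python
import math


def planar_jacobian(q, lengths):
    """Return the 2 x n position Jacobian of a planar arm with revolute joints."""
    n = len(q)
    cumulative = []
    total = 0.0
    for angle in q:
        total += angle
        cumulative.append(total)
    J = [[0.0] * n for _ in range(2)]
    for j in range(n):
        for k in range(j, n):  # joint j moves every link from j outward
            J[0][j] -= lengths[k] * math.sin(cumulative[k])
            J[1][j] += lengths[k] * math.cos(cumulative[k])
    return J


def constrained_joint_step(J, dx, dq_max, damping=1e-3, iters=2000):
    """Minimize ||J dq - dx||^2 + damping * ||dq||^2 subject to |dq_i| <= dq_max.

    Solved by projected gradient descent (an assumption made for brevity).
    """
    m, n = len(J), len(J[0])
    frob2 = sum(v * v for row in J for v in row)
    step = 1.0 / (2.0 * (frob2 + damping))  # 1 / (upper bound on the gradient's Lipschitz constant)
    dq = [0.0] * n
    for _ in range(iters):
        residual = [sum(J[i][j] * dq[j] for j in range(n)) - dx[i] for i in range(m)]
        grad = [
            2.0 * (sum(J[i][j] * residual[i] for i in range(m)) + damping * dq[j])
            for j in range(n)
        ]
        # Gradient step followed by projection onto the joint-step limits.
        dq = [max(-dq_max, min(dq_max, dq[j] - step * grad[j])) for j in range(n)]
    return dq


def tracking_error(J, dq, dx):
    """Euclidean norm of the deviation between achieved and desired tool motion."""
    achieved = [sum(row[j] * dq[j] for j in range(len(dq))) for row in J]
    return math.sqrt(sum((a - d) ** 2 for a, d in zip(achieved, dx)))


# Hypothetical configuration: unit link lengths, elbow and wrist bent 90 degrees.
q = [0.0, math.pi / 2, math.pi / 2]
J = planar_jacobian(q, [1.0, 1.0, 1.0])
desired = [0.01, -0.02]
dq = constrained_joint_step(J, desired, dq_max=0.015)
print("joint step:", dq, "tracking error:", tracking_error(J, dq, desired))
```

Additional objectives or constraints, such as joint limits or obstacle avoidance, would enter this sketch as extra cost terms or as a more general feasible set in place of the simple box projection. Projected gradient descent converges slowly near singular configurations, which is one reason to prefer a proper solver in practice.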

Not Active: 2014 – 2018

Small Animal Radiation Research Platform (SARRP)

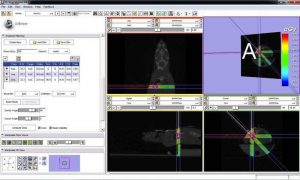

In cancer research, well-characterized small animal models of human cancer, such as transgenic mice, have greatly accelerated the pace of development of cancer treatments. The goal of the Small Animal Radiation Research Platform (SARRP) is to make those same models available for the development and evaluation of novel radiation therapies. SARRP can deliver high-resolution, sub-millimeter, optimally planned conformal radiation with on-board cone-beam CT (CBCT) guidance. SARRP has been licensed to Xstrahl (previously Gulmay Medical) and is described on the Xstrahl Small Animal Radiation Research Platform page. Recent developments include a Treatment Planning System (TPS) module for 3D Slicer that allows the user to specify a treatment plan consisting of x-ray beams and conformal arcs and uses a GPU to quickly compute the resulting dose volume. Current research efforts are focused on inverse treatment planning, where the user specifies dose constraints for the targets and organs at risk and the system determines the beam locations and weights.

Not Active: 2005 – 2018

Image Guided Neurosurgery Robot

We are investigating the use of robot systems to assist with image-guided neurosurgical procedures. Our initial approach was to use a cooperatively-controlled robot with virtual fixtures to prevent accidental damage to critical structures during the skull-base drilling procedure. We attached the cutting tool to the robot end-effector and operated the robot in a cooperative control mode, where robot motion is determined from the forces and torques applied by the surgeon. We employed virtual fixtures to constrain the motion of the cutting tool so that it remains in the safe zone that was defined on a preoperative CT scan. This system was implemented using a research version of the Neuromate robot, with a wrist-mounted force/torque sensor to enable cooperative control (left figure). Our current research is focused on the integration of photo-acoustic ultrasound to detect critical structures, such as the carotid artery, behind the bone being drilled for endonasal skull base surgery. In this system, the laser is mounted on the drill (via an optical fiber) and the 2D ultrasound probe is placed elsewhere on the skull. We have implemented a prototype telerobotic system using the da Vinci Research Kit (right figure).
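Cooperative control with a virtual fixture can be sketched in a few lines. The example below is a simplified illustration, not the controller used on the Neuromate system: it assumes a pure admittance law (tool velocity proportional to the applied force, ignoring torques) and approximates the safe zone by a single half-space bounded by a plane, whereas the actual safe zone was defined on a preoperative CT scan. The function names, the gain, and the plane parameters are hypothetical.

```python
def cooperative_velocity(force, gain):
    """Admittance law: commanded tool velocity is proportional to the applied force."""
    return [gain * f for f in force]


def apply_plane_fixture(position, velocity, plane_point, plane_normal, margin=0.0):
    """Forbidden-region virtual fixture for a half-space safe zone.

    plane_normal is a unit vector pointing into the safe zone. When the tool is
    within `margin` of the boundary and moving toward the forbidden side, the
    velocity component along the normal is removed; tangential motion is kept.
    """
    distance = sum((p - c) * n for p, c, n in zip(position, plane_point, plane_normal))
    normal_speed = sum(v * n for v, n in zip(velocity, plane_normal))
    if distance <= margin and normal_speed < 0.0:
        return [v - normal_speed * n for v, n in zip(velocity, plane_normal)]
    return list(velocity)


# Hypothetical values: the surgeon pushes sideways and downward on the tool,
# which is already touching the boundary plane z = 0 (safe zone is z >= 0).
force = [1.0, 0.0, -2.0]            # N
velocity = cooperative_velocity(force, gain=0.01)
safe_velocity = apply_plane_fixture(
    position=[0.0, 0.0, 0.0],
    velocity=velocity,
    plane_point=[0.0, 0.0, 0.0],
    plane_normal=[0.0, 0.0, 1.0],
)
print("commanded:", velocity, "after fixture:", safe_velocity)
```

With this design the surgeon can still slide the tool along the boundary, but cannot push it through. A safe zone segmented from CT would replace the single plane with a more general boundary representation.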

Not Active: 2006 – 2017

Prostate Brachytherapy Robot

In collaboration with Gabor Fichtinger (Queen’s University), E. Clif Burdette (Acoustic MedSystems, Inc.), Gernot Kronreif (Profactor GmbH) and Iulian Iordachita (JHU), we developed a robot system to replace the conventional template in transrectal ultrasound (TRUS) guided prostate low dose rate (LDR) brachytherapy. Our approach was to introduce the robot without changing the established clinical hardware, workflow and calibration procedures, thereby enabling us to more easily transition from research to clinical trials. The system is integrated with an FDA-approved treatment planning system (Interplant) and its associated ultrasound unit. We provide a small 4-axis robot that mounts on the ultrasound unit and is interchangeable with the conventional template. The robot positions and orients a needle guide, so the final act of needle insertion is left to the clinician. This preserves the haptic feedback that the clinician is accustomed to, while also reducing the level of technical risk. The advantage of the robot system is that it provides a continuum of needle positions and angulations, in contrast to the template, which has a fixed 5 mm grid and only allows parallel needles. We successfully performed a Phase-I clinical feasibility and safety trial with 5 patients, under an approved IRB protocol, in 2007. Since then, we have developed a needle quick release mechanism to enable insertion of multiple needles prior to depositing the radioactive sources.

Not Active: 2004 – 2017

Software System Safety for Medical and Surgical Robotics

As robot systems increasingly operate in close proximity to humans, their safety is receiving more attention in the robotics community, in both academia and industry. However, safety has not received much attention within the medical and surgical robotics domain, despite its crucial importance. A further practical issue is that building safe medical and surgical robot systems is not trivial, because typical computer-assisted intervention applications combine many different devices, such as haptic interfaces, tracking systems, imaging systems, and robot controllers. This increases the scale and complexity of a system, making it increasingly difficult to satisfy both its functional and non-functional requirements.

This project investigates the issue of safety of medical robot systems with the consideration of run-time aspects of component-based software systems. The goal is to improve the safety design process and to facilitate the development of robot systems with the consideration of safety, thereby building safe medical robot systems in a more effective, verifiable, and systematic manner. Our first step is to establish a conceptual framework that can systematically capture and present the design of safety features. The next step is to develop a software framework that can actually implement and realize our approach within component-based robot systems. As validation, we apply our approach and the developed framework to an actual commercial robot system for orthopaedic surgery, called the ROBODOC System (see below).

For this research, we use the cisst libraries, an open source C++ component-based software framework, and Surgical Assistant Workstation (SAW), a collection of reusable components for computer-assisted interventional systems.

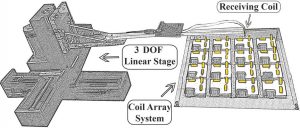

Hybrid Surgical Tracking System

This began as a joint project with the Fraunhofer IPA in Stuttgart, Germany, where the goal was to develop surgical tracking technology that is accurate, robust against environmental disturbances, and does not require line-of-sight. In this project, we are developing a hybrid tracking approach, using electromagnetic tracking (EMT) and inertial sensing, to create a tracking system that has high accuracy, no line-of-sight requirement, and minimal susceptibility to environmental effects such as nearby metal objects. Our initial experiments utilized an off-the-shelf EMT and inertial measurement unit (IMU), but this introduced two severe limitations in our sensor fusion approach: (1) the EMT and IMU readings were not well synchronized, and (2) we only had access to the final results from each system (e.g., the position/orientation from the EMT) and thus could not consider sensor fusion of the raw signals. We therefore developed custom hardware to overcome these limitations.
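To make the idea of EMT/IMU fusion concrete, the sketch below shows a one-dimensional complementary filter. This is an assumption chosen for illustration and is not the fusion method developed in this project. It fuses only the final outputs of each system (an EMT orientation angle and an IMU gyroscope rate) and assumes the two streams are perfectly synchronized, which are exactly the two simplifications that the off-the-shelf setup described above could not avoid or guarantee. The function name and the blending factor `alpha` are hypothetical.

```python
def complementary_filter(emt_angles, gyro_rates, dt, alpha=0.98, initial=0.0):
    """Fuse EMT angle readings with integrated gyroscope rates (1D sketch).

    The gyroscope dominates at short time scales (smooth, unaffected by nearby
    metal), while the EMT reading slowly corrects the drift caused by gyro bias.
    """
    if len(emt_angles) != len(gyro_rates):
        raise ValueError("EMT and gyro streams must have the same length")
    estimate = initial
    history = []
    for emt_angle, rate in zip(emt_angles, gyro_rates):
        predicted = estimate + rate * dt          # inertial prediction
        estimate = alpha * predicted + (1.0 - alpha) * emt_angle
        history.append(estimate)
    return history


# Hypothetical data: a stationary sensor at 0.5 rad, with a biased gyroscope.
n = 1000
estimates = complementary_filter([0.5] * n, [0.01] * n, dt=0.01)
print("final estimate:", estimates[-1])
```

A larger `alpha` trusts the inertial prediction more and therefore rejects short EMT disturbances better, at the cost of a larger steady-state offset from gyroscope bias.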

Not Active: 2008 – 2015

ROBODOC® System for Orthopaedic Surgery

This was a collaborative research project with Curexo Technology Corporation (now Think Surgical), developer and manufacturer of the ROBODOC® System.

For this project, we built the entire software stack of the robot system for research, from an interface to a commercial low-level motor controller to various applications (e.g., robot calibration, surgical workflow), using real-time operating systems with the component-based software engineering approach. We use the cisst package and Surgical Assistant Workstation components as the component-based framework.

This system is used to investigate several topics such as the exploration of kinematic models, new methods for calibration of robot kinematic parameters, and safety research of component-based medical and surgical robot systems. We also use this system for graduate-level course projects, such as the Enabling Technology for Robot Assisted Ultrasound Tomography project from 600.446 Advanced Computer Integrated Surgery (CIS II).

Completed: 2009 – 2015

Elastography with LARSnake Robot

The aim of this project is to control a dexterous snake-like robot under ultrasound imaging guidance for ultrasound elastography. Image guidance is provided by an ultrasonic micro-array attached at the tip of the snake-like robot. The snake-like unit with the ultrasonic micro-array can be seen in the figure on the left. In the robotic system we developed, the snake-like unit is attached to the tip of the IBM Laparoscopic Assistant for Robotic Surgery (LARS) system.

The main role of the LARSnake system in this project is to generate precise palpation motions along the imaging plane of the ultrasound array. An ultrasonic micro-array can provide B-mode images of tissue; however, if both compressed and uncompressed images of the tissue are available, then elastography (strain) images of the malignant tissue can be obtained, which is the main purpose of this project.
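The relationship between compression and a strain image can be sketched as follows. The example assumes that the axial tissue displacement between the uncompressed and compressed frames has already been estimated along one scan line (for example by speckle tracking, which is not shown), and it computes strain as the spatial gradient of that displacement. The function name and the two-layer test profile are hypothetical, and this is not the project's processing pipeline.

```python
def axial_strain(displacement, spacing):
    """Estimate axial strain as the spatial gradient of axial displacement.

    Uses central differences in the interior and one-sided differences at the
    two ends of the scan line. `spacing` is the distance between samples.
    """
    n = len(displacement)
    if n < 2:
        raise ValueError("need at least two displacement samples")
    strain = []
    for i in range(n):
        lo = max(i - 1, 0)
        hi = min(i + 1, n - 1)
        strain.append((displacement[hi] - displacement[lo]) / ((hi - lo) * spacing))
    return strain


# Hypothetical scan line: a soft layer (2% strain) over a stiffer layer (0.5% strain).
spacing = 0.1  # mm between samples
displacement = [
    0.02 * min(i * spacing, 1.0) + 0.005 * max(i * spacing - 1.0, 0.0)
    for i in range(21)
]
strain = axial_strain(displacement, spacing)
print("strain in soft layer:", strain[2], "strain in stiff layer:", strain[15])
```

Stiffer tissue deforms less under the same palpation and therefore appears as a low-strain region, which is why precise, repeatable compression along the imaging plane matters for this application.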

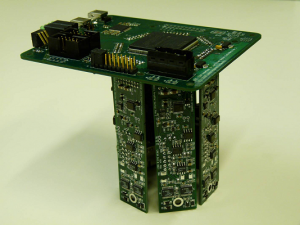

Scalable IEEE 1394 (FireWire)-Based Motion Controller for the Snake Robot

Research in surgical robots often calls for multi-axis controllers and other I/O hardware for interfacing various devices with computers. As the need for dexterity increases, the hardware and software interfaces required to support additional joints can become cumbersome and impractical. To facilitate prototyping of robots and experimentation with large numbers of axes, it would be beneficial to have controllers that scale well in this regard.

High-speed serial buses such as IEEE 1394 (FireWire) and low-latency field-programmable gate arrays make it possible to consolidate multiple data streams into a single cable. Contemporary computers running real-time operating systems have the ability to process such dense data streams, thus motivating a centralized processing, distributed I/O control architecture. This is particularly advantageous in education and research environments.

This project involves the design of a real-time (one kilohertz) robot controller inspired by these motivations and capitalizing on accessible yet powerful technologies. The device is developed for the JHU Snake Robot, a novel snake-like manipulator designed for minimally invasive surgery of the throat and upper airways.

Completed: 2008 – 2011 (see Open Source Mechatronics for subsequent developments)

Image-guided robot for small animal research

Collaborative effort with Memorial Sloan Kettering Cancer Center