HMD for the First Assistant in Robotic Surgery

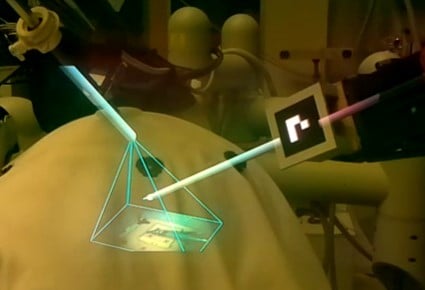

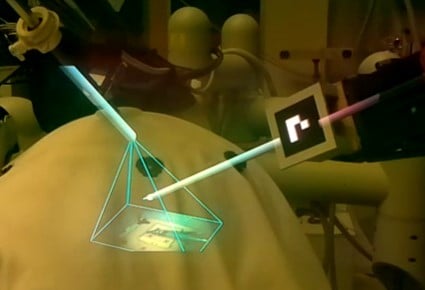

In robotic-assisted laparoscopic surgery, the first assistant (FA) stands at the bedside and assists with the intervention, while the surgeon sits at the console and teleoperates the robot. We are developing ARssist, an augmented reality application based on an optical see-through head-mounted display, to aid the FA. ARssist visualizes the robotic instruments and endoscope “inside” the patient's body, and offers several ways to render the stereo endoscopy video on the head-mounted display, as shown in the accompanying figures.

Publications

Greene, Nicholas; Luo, Wenkai; Kazanzides, Peter

dVPose: Automated Data Collection and Dataset for 6D Pose Estimation of Robotic Surgical Instruments Proceedings Article

In: IEEE Intl. Symp. on Medical Robotics (ISMR), 2023.

@inproceedings{GreeneISMR2023,

title = {dVPose: Automated Data Collection and Dataset for 6D Pose Estimation of Robotic Surgical Instruments},

author = {Nicholas Greene and Wenkai Luo and Peter Kazanzides},

year = {2023},

date = {2023-04-01},

urldate = {2023-04-01},

booktitle = {IEEE Intl. Symp. on Medical Robotics (ISMR)},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

Qian, Long; Zhang, Xiran; Deguet, Anton; Kazanzides, Peter

ARAMIS: Augmented Reality Assistance for Minimally Invasive Surgery Using a Head-Mounted Display Proceedings Article

In: Medical Image Computing and Computer-Assisted Intervention (MICCAI), pp. 74-82, 2019.

@inproceedings{Qian2019Miccai,

title = {ARAMIS: Augmented Reality Assistance for Minimally Invasive Surgery Using a Head-Mounted Display},

author = {Long Qian and Xiran Zhang and Anton Deguet and Peter Kazanzides},

year = {2019},

date = {2019-10-01},

booktitle = {Medical Image Computing and Computer-Assisted Intervention (MICCAI)},

pages = {74-82},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

Qian, Long; Deguet, Anton; Wang, Zerui; Liu, Yun-Hui; Kazanzides, Peter

Augmented Reality Assisted Instrument Insertion and Tool Manipulation for the First Assistant in Robotic Surgery Proceedings Article

In: IEEE Intl. Conf. on Robotics and Automation (ICRA), pp. 5173-5179, 2019.

@inproceedings{Qian2019,

title = {Augmented Reality Assisted Instrument Insertion and Tool Manipulation for the First Assistant in Robotic Surgery},

author = {Long Qian and Anton Deguet and Zerui Wang and Yun-Hui Liu and Peter Kazanzides},

year = {2019},

date = {2019-05-01},

booktitle = {IEEE Intl. Conf. on Robotics and Automation (ICRA)},

pages = {5173-5179},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

Qian, Long; Deguet, Anton; Kazanzides, Peter

ARssist: augmented reality on a head-mounted display for the first assistant in robotic surgery Journal Article

In: IET Healthcare Technology Letters, vol. 5, no. 5, pp. 194-200, 2018.

@article{Qian2018a,

title = {ARssist: augmented reality on a head-mounted display for the first assistant in robotic surgery},

author = {Long Qian and Anton Deguet and Peter Kazanzides},

doi = {10.1049/htl.2018.5065},

year = {2018},

date = {2018-10-01},

journal = {IET Healthcare Technology Letters},

volume = {5},

number = {5},

pages = {194-200},

keywords = {},

pubstate = {published},

tppubtype = {article}

}

HMD for Training of Medical Procedures

We have been researching the use of augmented reality overlays on an HMD to support training of medical procedures. Our initial project supported the training of combat medics; for example, performing a needle decompression procedure in response to a diagnosed tension pneumothorax. We developed application software, using the Unity3D framework, that reads an augmented workflow from a JSON file. More recently, we have been applying this software to the training of neurosurgical procedures, such as ventriculostomy.
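To make the idea of a workflow stored in JSON more concrete, the following is a minimal sketch in Python (the application itself is built with Unity3D). The field names (`procedure`, `steps`, `instruction`, `overlay`) and the step text are hypothetical placeholders chosen for this example; the actual file schema is not described here.

```python
import json
from dataclasses import dataclass
from typing import List, Optional

# Hypothetical workflow file content; the real schema may differ.
EXAMPLE_WORKFLOW = """
{
  "procedure": "needle decompression",
  "steps": [
    {"instruction": "Locate the insertion site", "overlay": "site_marker"},
    {"instruction": "Insert the needle", "overlay": null}
  ]
}
"""


@dataclass
class Step:
    instruction: str         # text shown to the trainee
    overlay: Optional[str]   # name of a virtual object to display, if any


def load_workflow(text: str) -> List[Step]:
    """Parse a JSON workflow into an ordered list of steps."""
    data = json.loads(text)
    return [Step(s["instruction"], s.get("overlay")) for s in data["steps"]]


steps = load_workflow(EXAMPLE_WORKFLOW)
for number, step in enumerate(steps, start=1):
    print(number, step.instruction, step.overlay)
```

Keeping the workflow in a data file rather than in code means that, in a design like this one, a new procedure could be added by writing a new JSON file without rebuilding the application.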

Publications

Azimi, Ehsan; Winkler, Alexander; Tucker, Emerson; Qian, Long; Doswell, Jayfus; Navab, Nassir; Kazanzides, Peter

Can Mixed-Reality Improve the Training of Medical Procedures? Proceedings Article

In: IEEE Engin. in Medicine and Biology Conf. (EMBC), pp. 4065-4068, 2018.

@inproceedings{Azimi2018b,

title = {Can Mixed-Reality Improve the Training of Medical Procedures?},

author = {Ehsan Azimi and Alexander Winkler and Emerson Tucker and Long Qian and Jayfus Doswell and Nassir Navab and Peter Kazanzides},

year = {2018},

date = {2018-07-01},

booktitle = {IEEE Engin. in Medicine and Biology Conf. (EMBC)},

pages = {4065-4068},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

Azimi, Ehsan; Winkler, Alexander; Tucker, Emerson; Qian, Long; Sharma, Manyu; Doswell, Jayfus; Navab, Nassir; Kazanzides, Peter

Evaluation of Optical See-Through Head-Mounted Displays in Training for Critical Care and Trauma Proceedings Article

In: IEEE Virtual Reality (VR), pp. 96-97, 2018.

@inproceedings{Azimi2018a,

title = {Evaluation of Optical See-Through Head-Mounted Displays in Training for Critical Care and Trauma},

author = {Ehsan Azimi and Alexander Winkler and Emerson Tucker and Long Qian and Manyu Sharma and Jayfus Doswell and Nassir Navab and Peter Kazanzides},

year = {2018},

date = {2018-03-01},

booktitle = {IEEE Virtual Reality (VR)},

pages = {96-97},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

Calibration of Optical See-Through HMDs

For an optical see-through HMD, it is necessary to calibrate the display to the user’s eyes, so that augmented overlays are displayed in the correct locations with respect to the physical world. We have developed new methods for calibration of both mono and stereo display systems.
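As an illustration of what such a calibration produces, the sketch below assumes the common model in which the eye-display system is described by a 3x4 projection matrix that maps a tracked 3D point to a 2D display pixel (the model estimated by SPAAM-style methods). The matrix values and function names are hypothetical, and the estimation step itself is not shown; the code only applies a given matrix and measures the reprojection error against user alignments.

```python
from typing import List, Sequence, Tuple

Matrix3x4 = List[List[float]]


def project(P: Matrix3x4, point: Sequence[float]) -> Tuple[float, float]:
    """Map a 3D point (tracker frame) to 2D display coordinates using P."""
    x, y, z = point
    h = [row[0] * x + row[1] * y + row[2] * z + row[3] for row in P]
    return h[0] / h[2], h[1] / h[2]  # perspective divide


def mean_reprojection_error(
    P: Matrix3x4,
    points_3d: Sequence[Sequence[float]],
    pixels_2d: Sequence[Sequence[float]],
) -> float:
    """Average pixel distance between projected points and aligned pixels."""
    total = 0.0
    for p3, (u, v) in zip(points_3d, pixels_2d):
        pu, pv = project(P, p3)
        total += ((pu - u) ** 2 + (pv - v) ** 2) ** 0.5
    return total / len(points_3d)


# Hypothetical calibration result: focal length 1000 px,
# principal point at (640, 360), no rotation or translation.
P_EXAMPLE = [
    [1000.0, 0.0, 640.0, 0.0],
    [0.0, 1000.0, 360.0, 0.0],
    [0.0, 0.0, 1.0, 0.0],
]

print(project(P_EXAMPLE, (0.1, -0.05, 0.5)))
```

A low mean reprojection error over alignments that were not used for estimation is one simple indication that overlays will appear at the intended locations.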

Publications

Azimi, Ehsan; Qian, Long; Kazanzides, Peter; Navab, Nassir

Robust Optical See-Through Head-Mounted Display Calibration: Taking Anisotropic Nature of User Interaction Errors into Account Proceedings Article

In: IEEE Virtual Reality, pp. 219-220, Los Angeles, CA, 2017.

@inproceedings{Azimi2017a,

title = {Robust Optical See-Through Head-Mounted Display Calibration: Taking Anisotropic Nature of User Interaction Errors into Account},

author = {Azimi, Ehsan and Qian, Long and Kazanzides, Peter and Navab, Nassir},

year = {2017},

date = {2017-03-01},

booktitle = {IEEE Virtual Reality},

pages = {219-220},

address = {Los Angeles, CA},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

Qian, Long; Winkler, Alexander; Fuerst, Bernhard; Kazanzides, Peter; Navab, Nassir

Modeling Physical Structure as Additional Constraints for Stereoscopic Optical See-Through Head-Mounted Display Calibration Proceedings Article

In: IEEE Intl. Symp. on Mixed and Augmented Reality (ISMAR), pp. 154-155, Merida, Mexico, 2016.

@inproceedings{Qian2016b,

title = {Modeling Physical Structure as Additional Constraints for Stereoscopic Optical See-Through Head-Mounted Display Calibration},

author = {Qian, Long and Winkler, Alexander and Fuerst, Bernhard and Kazanzides, Peter and Navab, Nassir},

year = {2016},

date = {2016-09-01},

booktitle = {IEEE Intl. Symp. on Mixed and Augmented Reality (ISMAR)},

pages = {154-155},

address = {Merida, Mexico},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

Qian, Long; Winkler, Alexander; Fuerst, Bernhard; Kazanzides, Peter; Navab, Nassir

Reduction of Interaction Space in Single Point Active Alignment Method for Optical See-Through Head-Mounted Display Calibration Proceedings Article

In: IEEE Intl. Symp. on Mixed and Augmented Reality (ISMAR), pp. 156-157, Merida, Mexico, 2016.

@inproceedings{Qian2016a,

title = {Reduction of Interaction Space in Single Point Active Alignment Method for Optical See-Through Head-Mounted Display Calibration},

author = {Qian, Long and Winkler, Alexander and Fuerst, Bernhard and Kazanzides, Peter and Navab, Nassir},

year = {2016},

date = {2016-09-01},

booktitle = {IEEE Intl. Symp. on Mixed and Augmented Reality (ISMAR)},

pages = {156-157},

address = {Merida, Mexico},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

Sensor Fusion to Overcome Occlusions in HMD Applications

Augmented reality overlays require a method to track the position of the user’s head (HMD) with respect to objects in the environment. For example, when overlaying tumor margins for image-guided neurosurgery, it is necessary to know the location of the HMD with respect to the patient. This tracking is generally provided by an optical tracking system (i.e., one or more cameras), which can be mounted on the HMD (“inside-out” tracking) or externally (“outside-in” tracking). In either case, the camera view can become fully or partially occluded. We developed a method to handle these occlusions by fusing measurements from the optical tracker with measurements from an inertial measurement unit (IMU). The method estimates the bias of the inertial sensors whenever the optical tracker provides full 6 degree-of-freedom (DOF) pose information. As long as the optical system can track the position of at least one marker, that 3-DOF position can be combined with the orientation estimated from the inertial measurements to recover the full 6-DOF pose. When all markers are occluded, position tracking relies on the inertial sensors, whose bias was previously estimated using the optical tracking system. Our experiments demonstrate that this approach can effectively handle long periods (at least several minutes) of partial occlusion and relatively short periods (up to a few seconds) of total occlusion.
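The two ideas in this description can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not the estimator from the publications below: the bias update uses a plain first-order low-pass filter on a single gyroscope axis as a stand-in, and all function names, the gain, and the numeric values are hypothetical. The pose recovery relies on the rigid-body relation p_marker = p_body + R_body * marker_offset, where the marker offset in the body frame is assumed to be known.

```python
import math
from typing import List, Sequence

Vector = List[float]
Matrix = List[List[float]]


def rot_z(angle_rad: float) -> Matrix:
    """Rotation about the z axis (used here only to build a demo orientation)."""
    c, s = math.cos(angle_rad), math.sin(angle_rad)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def mat_vec(R: Matrix, v: Sequence[float]) -> Vector:
    """Multiply a 3x3 matrix by a 3-vector."""
    return [sum(R[i][j] * v[j] for j in range(3)) for i in range(3)]


def body_position_from_marker(
    p_marker: Sequence[float], R_body: Matrix, marker_offset: Sequence[float]
) -> Vector:
    """Partial occlusion: one tracked marker position plus the inertial
    orientation gives the body position, p_body = p_marker - R_body * offset."""
    rotated = mat_vec(R_body, marker_offset)
    return [p_marker[i] - rotated[i] for i in range(3)]


def update_gyro_bias(
    bias: float, gyro_rate: float, optical_rate: float, gain: float = 0.05
) -> float:
    """No occlusion: nudge the bias estimate toward the difference between
    the gyro reading and the angular rate derived from optical tracking."""
    return bias + gain * ((gyro_rate - optical_rate) - bias)


# Demo with made-up numbers: body rotated 90 degrees about z,
# marker mounted 0.1 m along the body x axis.
R = rot_z(math.pi / 2)
print(body_position_from_marker([1.0, 2.1, 3.0], R, [0.1, 0.0, 0.0]))

bias = 0.0
for _ in range(200):
    bias = update_gyro_bias(bias, gyro_rate=0.52, optical_rate=0.50)
print(bias)
```

During total occlusion, the estimated bias would be subtracted from the raw inertial readings before integration, which is why the estimate must be kept current while the optical tracker is still available.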

Publications

He, Changyu; Kazanzides, Peter; Sen, Hasan Tutkun; Kim, Sungmin; Liu, Yue

An Inertial and Optical Sensor Fusion Approach for Six Degree-of-Freedom Pose Estimation Journal Article

In: Sensors, vol. 15, no. 7, pp. 16448-16465, 2015.

@article{He2015,

title = {An Inertial and Optical Sensor Fusion Approach for Six Degree-of-Freedom Pose Estimation},

author = {He, Changyu and Kazanzides, Peter and Sen, Hasan Tutkun and Kim, Sungmin and Liu, Yue},

year = {2015},

date = {2015-07-01},

journal = {Sensors},

volume = {15},

number = {7},

pages = {16448-16465},

publisher = {MDPI},

keywords = {},

pubstate = {published},

tppubtype = {article}

}

He, Changyu; Sen, H. Tutkun; Kim, Sungmin; Sadda, Praneeth; Kazanzides, Peter

Fusion of Inertial Sensing to Compensate for Partial Occlusions in Optical Tracking Systems Proceedings Article

In: MICCAI Workshop on Augmented Environments for Computer-Assisted Interventions (AE-CAI), pp. 60-69, Springer LNCS 8678, Boston, MA, 2014.

@inproceedings{He2014,

title = {Fusion of Inertial Sensing to Compensate for Partial Occlusions in Optical Tracking Systems},

author = {He, Changyu and Sen, H. Tutkun and Kim, Sungmin and Sadda, Praneeth and Kazanzides, Peter},

year = {2014},

date = {2014-09-01},

booktitle = {MICCAI Workshop on Augmented Environments for Computer-Assisted Interventions (AE-CAI)},

pages = {60-69},

publisher = {Springer LNCS 8678},

address = {Boston, MA},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

HMD for Image-Guided Neurosurgery

In this project, the goal is to enable a surgeon wearing the HMD to perform image-guided surgery while keeping hands and eyes focused on the patient. The developed system does not display high-resolution preoperative images on the HMD, but rather shows simple graphics derived from the navigation information. For example, the navigation information can include models of the patient's anatomy that are obtained from preoperative images, such as biopsy target points and tumor outlines. The system presents one or more “picture-in-picture” virtual views of the preoperative data and shows the positions of tracked instruments with respect to these views.
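Showing a tracked instrument relative to preoperative data requires expressing its position in the coordinate frame of that data. The sketch below assumes that a registration step has already produced a 4x4 homogeneous transform from the tracker frame to the image frame; the function names, the transform, and the coordinates are hypothetical and serve only to illustrate the computation.

```python
import math
from typing import List, Sequence

Transform = List[List[float]]  # 4x4 homogeneous transform, row-major


def apply_transform(T: Transform, p: Sequence[float]) -> List[float]:
    """Apply a 4x4 homogeneous transform to a 3D point."""
    return [
        T[i][0] * p[0] + T[i][1] * p[1] + T[i][2] * p[2] + T[i][3]
        for i in range(3)
    ]


def distance_to_target(
    T_image_from_tracker: Transform,
    tip_tracker: Sequence[float],
    target_image: Sequence[float],
) -> float:
    """Distance from the instrument tip to a target point defined in the
    preoperative image frame (same length unit as the inputs)."""
    tip_image = apply_transform(T_image_from_tracker, tip_tracker)
    return math.dist(tip_image, target_image)


# Made-up registration: pure translation of 10 units along x.
T_EXAMPLE = [
    [1.0, 0.0, 0.0, 10.0],
    [0.0, 1.0, 0.0, 0.0],
    [0.0, 0.0, 1.0, 0.0],
    [0.0, 0.0, 0.0, 1.0],
]

print(distance_to_target(T_EXAMPLE, [0.0, 0.0, 0.0], [13.0, 4.0, 0.0]))
```

A scalar such as this distance is an example of the kind of simple, low-bandwidth graphic that can be derived from navigation data without rendering the full preoperative images.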

Publications

Azimi, Ehsan; Niu, Zhiyuan; Stiber, Maia; Greene, Nicholas; Liu, Ruby; Molina, Camilo; Huang, Judy; Huang, Chien-Ming; Kazanzides, Peter

An Interactive Mixed Reality Platform for Bedside Surgical Procedures Proceedings Article

In: Medical Image Computing and Computer-Assisted Intervention (MICCAI), pp. 65-75, 2020.

@inproceedings{Azimi2020b,

title = {An Interactive Mixed Reality Platform for Bedside Surgical Procedures},

author = {Ehsan Azimi and Zhiyuan Niu and Maia Stiber and Nicholas Greene and Ruby Liu and Camilo Molina and Judy Huang and Chien-Ming Huang and Peter Kazanzides},

year = {2020},

date = {2020-10-01},

booktitle = {Medical Image Computing and Computer-Assisted Intervention (MICCAI)},

pages = {65-75},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

Azimi, Ehsan; Liu, Ruby; Molina, Camilo; Huang, Judy; Kazanzides, Peter

Interactive Navigation System in Mixed-Reality for Neurosurgery Proceedings Article

In: IEEE Virtual Reality (VR), pp. 783-784, 2020.

@inproceedings{Azimi2020a,

title = {Interactive Navigation System in Mixed-Reality for Neurosurgery},

author = {Ehsan Azimi and Ruby Liu and Camilo Molina and Judy Huang and Peter Kazanzides},

year = {2020},

date = {2020-03-01},

booktitle = {IEEE Virtual Reality (VR)},

pages = {783-784},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

Azimi, Ehsan; Molina, Camilo; Chang, Alexander; Huang, Judy; Huang, Chien-Ming; Kazanzides, Peter

Interactive Training and Operation Ecosystem for Surgical Tasks in Mixed Reality Proceedings Article

In: MICCAI Workshop on OR 2.0 Context-Aware Operating Theaters, pp. 20-29, 2018.

@inproceedings{Azimi2018c,

title = {Interactive Training and Operation Ecosystem for Surgical Tasks in Mixed Reality},

author = {Ehsan Azimi and Camilo Molina and Alexander Chang and Judy Huang and Chien-Ming Huang and Peter Kazanzides},

year = {2018},

date = {2018-09-01},

booktitle = {MICCAI Workshop on OR 2.0 Context-Aware Operating Theaters},

pages = {20-29},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

Sadda, Praneeth; Azimi, Ehsan; Jallo, George; Doswell, Jayfus; Kazanzides, Peter

Surgical navigation with a head-mounted tracking system and display Proceedings Article

In: Medicine Meets Virtual Reality (MMVR), San Diego, CA, 2013.

@inproceedings{Sadda2013,

title = {Surgical navigation with a head-mounted tracking system and display},

author = {Sadda, Praneeth and Azimi, Ehsan and Jallo, George and Doswell, Jayfus and Kazanzides, Peter},

year = {2013},

date = {2013-02-01},

booktitle = {Medicine Meets Virtual Reality (MMVR)},

address = {San Diego, CA},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

Azimi, Ehsan; Doswell, Jayfus; Kazanzides, Peter

Augmented reality goggles with an integrated tracking system for navigation in neurosurgery Proceedings Article

In: IEEE Virtual Reality, pp. 123-124, Orange County, CA, 2012.

@inproceedings{Azimi2012,

title = {Augmented reality goggles with an integrated tracking system for navigation in neurosurgery},

author = {Ehsan Azimi and Jayfus Doswell and Peter Kazanzides},

year = {2012},

date = {2012-03-01},

booktitle = {IEEE Virtual Reality},

pages = {123-124},

address = {Orange County, CA},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

Press Coverage

JHU Hub Article, ‘Mixed Reality’ Makes for Better Surgeons, May 17, 2019

WUSA, Channel 9 (Washington DC), TV News Story, July 31, 2019